Contents

- File systems and network

- Contents of the VFS component

- Creating an IPC channel to VFS

- Including VFS functionality in a program

- Overview: startup parameters and environment variables of VFS

- Mounting file systems when VFS starts

- Using VFS backends to separate data streams

- Creating a VFS backend

- Dynamically configuring the network stack

File systems and network

In KasperskyOS, operations with file systems and the network are executed via a separate system program that implements a virtual file system (VFS).

In the SDK, the VFS component consists of a set of executable files, libraries, formal specification files, and header files. For more details, see the Contents of the VFS component section.

The main scenario of interaction with the VFS system program includes the following:

- An application is linked to the client library of the VFS component during the build and connects to the VFS system program via an IPC channel.

- In the application code, POSIX calls for working with file systems and the network are converted into client library function calls.

Input and output on the file handles of the standard I/O streams (stdin, stdout and stderr) are also converted into queries to the VFS. If the application is not linked to the client library of the VFS component, printing to stdout is not possible. In that case, output is possible only to the standard error stream (stderr), which is handled by special methods of the KasperskyOS kernel without using VFS.

- The client library makes IPC requests to the VFS system program.

- The VFS system program receives the IPC requests and calls the corresponding file system implementations (which, in turn, may make IPC requests to device drivers) or network drivers.

- After the request is handled, the VFS system program responds to the IPC requests of the application.
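The scenario above means that application code only contains ordinary POSIX calls. The following is a minimal sketch of such code, not taken from the SDK: the helper name file_roundtrip and the path passed to it are hypothetical. On KasperskyOS, an application linked to the VFS client library would have these open(), write() and read() calls forwarded to the VFS system program.

```c
#include <fcntl.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Hypothetical helper (not part of the SDK): writes `text` to `path` and
 * reads it back into `buf` using plain POSIX calls.
 * Returns the number of bytes read back, or -1 on error.
 */
ssize_t file_roundtrip(const char *path, const char *text,
                       char *buf, size_t buf_size)
{
    if (buf_size == 0) {
        return -1;
    }

    size_t len = strlen(text);

    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0600);
    if (fd < 0) {
        /* Diagnostics go to stderr rather than stdout. */
        perror("open for writing");
        return -1;
    }
    ssize_t written = write(fd, text, len);
    close(fd);
    if (written != (ssize_t)len) {
        return -1;
    }

    fd = open(path, O_RDONLY);
    if (fd < 0) {
        perror("open for reading");
        return -1;
    }
    ssize_t got = read(fd, buf, buf_size - 1);
    close(fd);
    if (got < 0) {
        return -1;
    }

    buf[got] = '\0';   /* make the result usable as a C string */
    return got;
}
```

A caller would pass a path on a mounted file system and a buffer large enough for the text plus the terminating null byte.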

Using multiple VFS programs

Multiple copies of the VFS system program can be added to a solution for the purpose of separating the data streams of different system programs and applications. You can also separate the data streams within one application. For more details, refer to Using VFS backends to separate data streams.

Adding VFS functionality to an application

The complete functionality of the VFS component can be included in an application, thereby avoiding the need to pass each request via IPC. For more details, refer to Including VFS functionality in a program.

However, use of VFS functionality via IPC enables the solution developer to do the following:

- Use a solution security policy to control method calls for working with the network and file systems.

- Connect multiple client programs to one VFS program.

- Connect one client program to two VFS programs to separately work with the network and file systems.

Contents of the VFS component

The VFS component implements the virtual file system. In the KasperskyOS SDK, the VFS component consists of a set of executable files, libraries, formal specification files and header files that enable the use of file systems and/or a network stack.

VFS libraries

The vfs CMake package contains the following libraries:

- vfs_fs contains implementations of the devfs, ramfs and ROMFS file systems, and adds implementations of other file systems to VFS.

- vfs_net contains the implementation of the devfs file system and the network stack.

- vfs_imp contains the vfs_fs and vfs_net libraries.

- vfs_remote is the client transport library that converts local calls into IPC requests to VFS and receives IPC responses.

- vfs_server is the VFS server transport library that receives IPC requests, converts them into local calls, and sends IPC responses.

- vfs_local is used to include VFS functionality in a program.

VFS executable files

The precompiled_vfs CMake package contains the following executable files:

- VfsRamFs

- VfsSdCardFs

- VfsNet

The VfsRamFs and VfsSdCardFs executable files include the vfs_server, vfs_fs, vfat and lwext4 libraries. The VfsNet executable file includes the vfs_server and vfs_imp libraries.

Each of these executable files has its own default values for startup parameters and environment variables.

Formal specification files and header files of VFS

The sysroot-*-kos/include/kl directory from the KasperskyOS SDK contains the following VFS files:

- Formal specification files VfsRamFs.edl, VfsSdCardFs.edl, VfsNet.edl and VfsEntity.edl, and the header files generated from them.

- Formal specification file Vfs.cdl and the header file Vfs.cdl.h generated from it.

- Formal specification files Vfs*.idl and the header files generated from them.

Libc library API supported by VFS

VFS functionality is available to programs through the API provided by the libc library.

The functions implemented by the vfs_fs and vfs_net libraries are presented in the table below. The * character denotes the functions that are optionally included in the vfs_fs library (depending on the library build parameters).

Functions implemented by the vfs_fs library

Functions implemented by the vfs_net library

If there is no implementation of a called function in VFS, the EIO error code is returned.
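A caller can check for this error code after a failed libc call. The sketch below is only an illustration: the helper name is_possibly_unimplemented is hypothetical, and EIO is also the code for ordinary I/O errors, so the check can only suggest that a function is missing from the VFS build in use.

```c
#include <errno.h>
#include <stdio.h>

/*
 * Hypothetical helper (not part of the SDK).
 * `rc` is the return value of a libc call and `err` is the errno value saved
 * right after it. Returns 1 if the call failed with EIO, which may mean that
 * the function is not implemented in VFS; returns 0 otherwise.
 */
int is_possibly_unimplemented(const char *call_name, int rc, int err)
{
    if (rc != -1 || err != EIO) {
        return 0;
    }

    /* Report on stderr so that the message does not depend on stdout. */
    fprintf(stderr,
            "%s failed with EIO: the function may not be implemented in VFS\n",
            call_name);
    return 1;
}
```

Save errno into a local variable immediately after the failed call and pass that copy, because later library calls may overwrite errno.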

Creating an IPC channel to VFS

In this example, the Client process uses the file systems and network stack, and the VfsFsnet process handles the IPC requests of the Client process related to the use of file systems and the network stack. This approach is utilized when there is no need to separate data streams related to file systems and the network stack.

The IPC channel name must be assigned by the _VFS_CONNECTION_ID macro defined in the header file sysroot-*-kos/include/vfs/defs.h from the KasperskyOS SDK.

Init description of the example:

init.yaml

Including VFS functionality in a program

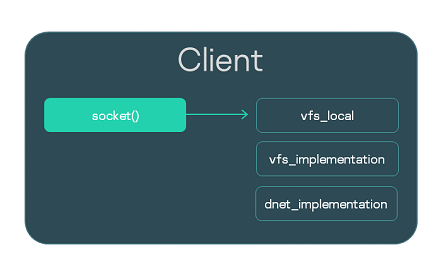

In this example, the Client program includes the VFS program functionality for working with the network stack (see the figure below).

VFS component libraries in a program

The client.c implementation file is compiled and the vfs_local, vfs_implementation and dnet_implementation libraries are linked:

CMakeLists.txt

If the Client program uses file systems, you must link the vfs_local and vfs_fs libraries and the libraries that implement these file systems. In this case, you must also add a block device driver to the solution.

Overview: startup parameters and environment variables of VFS

VFS program startup parameters

- -l <entry in fstab format>

  The startup parameter -l mounts the defined file system.

- -f <path to fstab file>

  The parameter -f mounts the file systems specified in the fstab file. If the UNMAP_ROMFS environment variable is not defined, the fstab file will be sought in the ROMFS image. If the UNMAP_ROMFS environment variable is defined, the fstab file will be sought in the file system defined through the ROOTFS environment variable.

Examples of using VFS program startup parameters

Environment variables of the VFS program

- UNMAP_ROMFS

  If the UNMAP_ROMFS environment variable is defined, the ROMFS image will be deleted from memory. This helps conserve memory. When using the startup parameter -f, it also provides the capability to search for the fstab file in the file system defined through the ROOTFS environment variable instead of searching the ROMFS image.

- ROOTFS = <entry in fstab format>

  The ROOTFS environment variable mounts the defined file system to the root directory. When using the startup parameter -f, a combination of the ROOTFS and UNMAP_ROMFS environment variables provides the capability to search for the fstab file in the file system defined through the ROOTFS environment variable instead of searching the ROMFS image.

- VFS_CLIENT_MAX_THREADS

  The VFS_CLIENT_MAX_THREADS environment variable redefines the SDK configuration parameter VFS_CLIENT_MAX_THREADS.

- _VFS_NETWORK_BACKEND=<VFS backend name>:<name of the IPC channel to the VFS process>

  The _VFS_NETWORK_BACKEND environment variable defines the VFS backend for working with the network stack. You can specify the name of the standard VFS backend: client (for a program that runs in the context of a client process), server (for a VFS program that runs in the context of a server process) or local, and the name of a custom VFS backend. If the local VFS backend is used, the name of the IPC channel is not specified (_VFS_NETWORK_BACKEND=local:). You can specify more than one IPC channel by separating them with a comma.

- _VFS_FILESYSTEM_BACKEND=<VFS backend name>:<name of the IPC channel to the VFS process>

  The _VFS_FILESYSTEM_BACKEND environment variable defines the VFS backend for working with file systems. The name of the VFS backend and the name of the IPC channel to the VFS process are defined the same way as they are defined in the _VFS_NETWORK_BACKEND environment variable.
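The value format of the two backend variables (a backend name, a colon, then zero or more comma-separated IPC channel names) can be illustrated with a small parser. This is only a sketch of the format, not the way VFS itself reads these variables. The structure, the function names and the size limits are all hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Illustrative limits; they are assumptions, not SDK values. */
#define MAX_CHANNELS 4
#define MAX_NAME_LEN 64

struct backend_spec {
    char   backend[MAX_NAME_LEN];                 /* e.g. "client", "server", "local" */
    char   channels[MAX_CHANNELS][MAX_NAME_LEN];  /* IPC channel names, may be none */
    size_t channel_count;
};

/* Copies `len` bytes of `src` into `dst`; rejects empty or oversized names. */
static int copy_field(char *dst, const char *src, size_t len)
{
    if (len == 0 || len >= MAX_NAME_LEN) {
        return -1;
    }
    memcpy(dst, src, len);
    dst[len] = '\0';
    return 0;
}

/*
 * Parses "<backend>:<channel>[,<channel>...]" or "<backend>:".
 * Returns 0 on success and -1 on a malformed value.
 */
int parse_backend_spec(const char *value, struct backend_spec *out)
{
    const char *colon = strchr(value, ':');
    if (colon == NULL) {
        return -1;                       /* the colon is always present */
    }
    if (copy_field(out->backend, value, (size_t)(colon - value)) != 0) {
        return -1;
    }

    out->channel_count = 0;
    const char *p = colon + 1;
    while (*p != '\0') {
        size_t len = strcspn(p, ",");    /* length up to the next comma or the end */
        if (out->channel_count == MAX_CHANNELS) {
            return -1;
        }
        if (copy_field(out->channels[out->channel_count], p, len) != 0) {
            return -1;
        }
        out->channel_count++;
        p += len;
        if (*p == ',') {
            p++;
            if (*p == '\0') {
                return -1;               /* trailing comma */
            }
        }
    }
    return 0;
}
```

With this sketch, the value local: parses to the backend name local with no channels, and a value with two comma-separated channel names parses to a backend name with two channels.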

Default values for startup parameters and environment variables of VFS

For the VfsRamFs executable file:

For the VfsSdCardFs executable file:

For the VfsNet executable file:

Mounting file systems when VFS starts

When the VFS program starts, only the RAMFS file system is mounted to the root directory by default. To mount other file systems, you can call the mount() function or set the startup parameters and environment variables of the VFS program.

The ROMFS and squashfs file systems are intended for read-only operations. For this reason, you must specify the ro parameter to mount these file systems.
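The read-only rule can be checked mechanically before an entry is passed to the VFS program. The sketch below rests on two assumptions: that an entry in fstab format has the conventional field order (source, mount point, file system type, options), and that the type names are written as romfs and squashfs. All names in the code are hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Fields of one fstab-format entry (conventional order is an assumption). */
struct fstab_entry {
    char source[64];
    char target[64];
    char fstype[32];
    char options[64];
};

/* Splits a whitespace-separated entry into its four fields. Returns 0 or -1. */
int parse_fstab_entry(const char *line, struct fstab_entry *e)
{
    int n = sscanf(line, "%63s %63s %31s %63s",
                   e->source, e->target, e->fstype, e->options);
    return n == 4 ? 0 : -1;
}

/* Returns 1 if the comma-separated option list contains `opt` as a whole item. */
static int has_option(const char *options, const char *opt)
{
    size_t opt_len = strlen(opt);
    const char *p = options;

    while (*p != '\0') {
        size_t len = strcspn(p, ",");
        if (len == opt_len && strncmp(p, opt, len) == 0) {
            return 1;
        }
        p += len;
        if (*p == ',') {
            p++;
        }
    }
    return 0;
}

/* Returns 1 if the entry is acceptable: read-only file systems must carry "ro". */
int entry_respects_read_only_rule(const struct fstab_entry *e)
{
    int read_only_fs = strcmp(e->fstype, "romfs") == 0 ||
                       strcmp(e->fstype, "squashfs") == 0;

    return !read_only_fs || has_option(e->options, "ro");
}
```

Entries for other file system types pass the check regardless of their options, because the rule applies only to the two read-only types.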

Using the startup parameter -l

One way to mount a file system is to set the startup parameter -l <entry in fstab format> for the VFS program.

In these examples, the devfs and ROMFS file systems will be mounted when the VFS program is started:

init.yaml.(in)

CMakeLists.txt

Using the fstab file from the ROMFS image

When building a solution, you can add the fstab file to the ROMFS image. This file can be used to mount file systems by setting the startup parameter -f <path to the fstab file> for the VFS program.

In these examples, the file systems defined via the fstab file that was added to the ROMFS image during the solution build will be mounted when the VFS program is started:

init.yaml.(in)

CMakeLists.txt

Using an "external" fstab file

If the fstab file resides in another file system instead of in the ROMFS image, you must set the following startup parameters and environment variables for the VFS program to enable use of this file:

- ROOTFS. This environment variable mounts the file system containing the fstab file to the root directory.

- UNMAP_ROMFS. If this environment variable is defined, the fstab file will be sought in the file system defined through the ROOTFS environment variable.

- -f. This startup parameter is used to mount the file systems specified in the fstab file.

In these examples, the ext2 file system that should contain the fstab file at the path /etc/fstab will be mounted to the root directory when the VFS program starts:

init.yaml.(in)

CMakeLists.txt

Using VFS backends to separate data streams

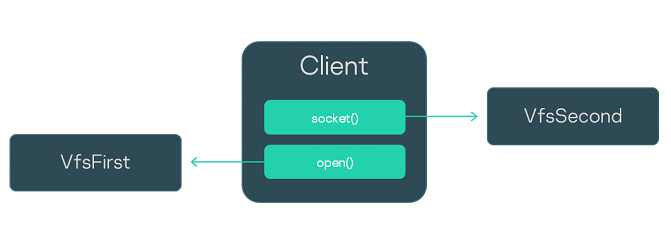

This example employs a secure development pattern that separates data streams related to file system use from data streams related to the use of a network stack.

The Client process uses file systems and the network stack. The VfsFirst process works with file systems, and the VfsSecond process provides the capability to work with the network stack. The environment variables of programs that run in the contexts of the Client, VfsFirst and VfsSecond processes are used to define the VFS backends that ensure the segregated use of file systems and the network stack. As a result, IPC requests of the Client process that are related to the use of file systems are handled by the VfsFirst process, and IPC requests of the Client process that are related to network stack use are handled by the VfsSecond process (see the figure below).

Process interaction scenario

Init description of the example:

init.yaml

Creating a VFS backend

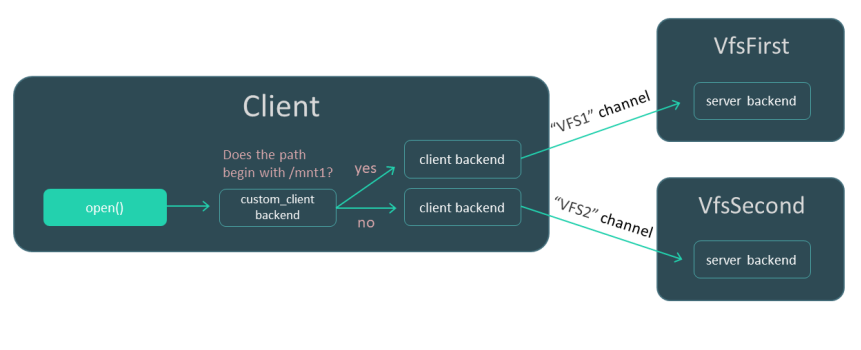

This example demonstrates how to create and use a custom VFS backend.

The Client process uses the fat32 and ext4 file systems. The VfsFirst process works with the fat32 file system, and the VfsSecond process provides the capability to work with the ext4 file system. The environment variables of programs that run in the contexts of the Client, VfsFirst and VfsSecond processes are used to define the VFS backends ensuring that IPC requests of the Client process are handled by the VfsFirst or VfsSecond process depending on the specific file system being used by the Client process. As a result, IPC requests of the Client process related to use of the fat32 file system are handled by the VfsFirst process, and IPC requests of the Client process related to use of the ext4 file system are handled by the VfsSecond process (see the figure below).

On the VfsFirst process side, the fat32 file system is mounted to the directory /mnt1. On the VfsSecond process side, the ext4 file system is mounted to the directory /mnt2. The custom VFS backend custom_client used on the Client process side sends IPC requests over the IPC channel VFS1 or VFS2 depending on whether or not the file path begins with /mnt1. The custom VFS backend uses the standard VFS backend client as an intermediary.

Process interaction scenario
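The routing rule of the custom backend (choose VFS1 when the path begins with /mnt1, otherwise VFS2) can be sketched in isolation. The function below is not the backend source and does not use the VFS backend API. The name select_channel is hypothetical, and only the decision logic described above is shown.

```c
#include <string.h>

/* IPC channel names and mount point taken from the description above. */
#define CHANNEL_FAT32 "VFS1"
#define CHANNEL_EXT4  "VFS2"
#define FAT32_PREFIX  "/mnt1"

/*
 * Hypothetical helper: returns the name of the IPC channel that should
 * carry a request for `path`.
 */
const char *select_channel(const char *path)
{
    /* Plain prefix comparison, as in the rule "the path begins with /mnt1". */
    if (strncmp(path, FAT32_PREFIX, strlen(FAT32_PREFIX)) == 0) {
        return CHANNEL_FAT32;
    }
    return CHANNEL_EXT4;
}
```

A plain prefix comparison also matches a path such as /mnt10/file. If such directories can exist, a stricter variant could additionally require that the character after the prefix is a slash or the end of the string.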

Source code of the VFS backend

This implementation file contains the source code of the VFS backend custom_client, which uses the standard client VFS backends:

backend.c

Linking the Client program

Creating a static VFS backend library:

CMakeLists.txt

Linking the Client program to the static VFS backend library:

CMakeLists.txt

Setting the startup parameters and environment variables of programs

Init description of the example:

init.yaml

Dynamically configuring the network stack

To change the default network stack parameters, use the sysctl() or sysctlbyname() function. Both are declared in the header file sysroot-*-kos/include/sys/sysctl.h from the KasperskyOS SDK. The parameters that can be changed are presented in the table below.

Configurable network stack parameters

| Parameter name | Parameter description |
|---|---|
| | Maximum time to live (TTL) of sent IP packets. It does not affect the ICMP protocol. |
| | If its value is set to |
| | MSS value (in bytes) that is applied only if the communicating side failed to provide this value when opening the TCP connection, or if "Path MTU Discovery" mode (RFC 1191) is not enabled. This MSS value is also forwarded to the communicating side when opening a TCP connection. |
| | Minimum MSS value, in bytes. |
| | If its value is set to |
| | Number of times to send test messages (Keep-Alive Probes, or KA) without receiving a response before the TCP connection will be considered closed. If its value is set to |
| | TCP connection idle period, after which keep-alive probes begin. This is defined in conditional units, which can be converted into seconds via division by the |
| | Time interval between recurring keep-alive probes when no response is received. This is defined in conditional units, which can be converted into seconds via division by the |
| | Size of the buffer (in bytes) for data received over the TCP protocol. |
| | Size of the buffer (in bytes) for data sent over the TCP protocol. |
| | Size of the buffer (in bytes) for data received over the UDP protocol. |
| | Size of the buffer (in bytes) for data sent over the UDP protocol. |
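The keep-alive idle period and probe interval are expressed in conditional units that are divided by another value to obtain seconds. The arithmetic can be sketched as follows. The function name and the units_per_second argument are hypothetical; the argument stands for the divisor, which is not named here. The numbers in the comment are illustrative and are not SDK defaults.

```c
/*
 * Hypothetical helper: converts a keep-alive value given in conditional
 * units into seconds. `units_per_second` is the divisor mentioned in the
 * table. Returns a negative value if the divisor is zero.
 *
 * Illustration: 150 units with a divisor of 2 correspond to 75 seconds.
 */
double keepalive_units_to_seconds(unsigned long units,
                                  unsigned long units_per_second)
{
    if (units_per_second == 0) {
        return -1.0;   /* avoid division by zero */
    }
    return (double)units / (double)units_per_second;
}
```

The reverse conversion, from a desired number of seconds to the value that is written to the parameter, is a multiplication by the same divisor.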

MSS configuration example: